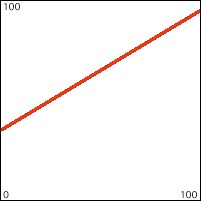

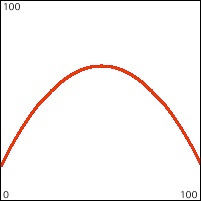

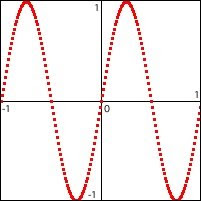

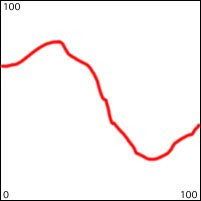

The idea is that when a control function has a shape that is in some way distinctive and memorable, and that shape is used to control one or more parameters of a sound, the shape is exemplified by the sound, and the resulting sound will be recognizable when it recurs. In this way, it can function like a musical motive. And like a musical motive, it can remain recognizable as related to its original occurrence even if it undergoes certain modifications or transformations. Some typical types of transformations of a musical motive are rhythmic augmentation or diminution (multiplying the duration by a given amount to increase or decrease its total duration, and thus decrease or increase its speed), intervallic augmentation or diminution (keeping the contour the same but changing the size of its vertical range), transposition (moving it vertically by adding a certain amount to it), or delay (this is implicit in anything that recurs, because music takes place in time; delay is crucial to musical ideas such as imitation, canon, etc.). All of these can be crudely emulated mathematically with the simple operations of multiplication (to change the size of the shape horizontally and/or vertically) and addition (to offset the shape vertically or in time). Even as a motive is distorted by these manipulations, it retains aspects of its original shape, and its relationship to its original state can remain recognizable, serving an aesthetically unifying role.
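As a rough illustration of how multiplication and addition cover all four transformations, here is a minimal Python sketch. It is not taken from the Max patch; the breakpoint list `motive`, the function `transform`, and its parameter names are hypothetical, chosen only for this example. A shape is represented as a list of (time, value) points, with both axes normalized to the range 0 to 1.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (time, value), both normalized 0..1 in the original shape


def transform(shape: List[Point],
              time_scale: float = 1.0,   # rhythmic augmentation/diminution
              delay: float = 0.0,        # offset in time
              value_scale: float = 1.0,  # intervallic augmentation/diminution
              offset: float = 0.0        # transposition
              ) -> List[Point]:
    """Scale and shift a breakpoint shape horizontally and vertically."""
    return [(t * time_scale + delay, v * value_scale + offset) for t, v in shape]


# A hypothetical motive: rise quickly, fall halfway, then return to the start.
motive = [(0.0, 0.0), (0.25, 1.0), (0.5, 0.5), (1.0, 0.0)]

# The same contour lasting 2 seconds, starting at 3 seconds,
# spanning 7 semitones above MIDI pitch 60.
variant = transform(motive, time_scale=2.0, delay=3.0, value_scale=7.0, offset=60.0)
print(variant)
```

The contour (up, partway down, back to the start) is the same in `motive` and `variant`; only the size and position have changed.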

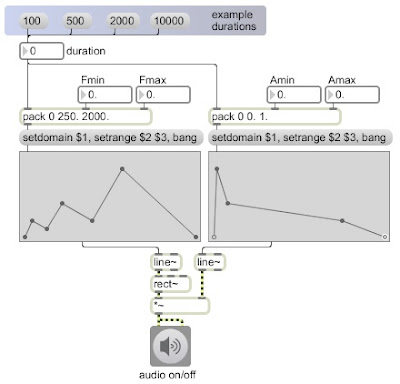

Using the same shapes as were used in the example on line segment control functions, let's see how the shape of a control function can be distorted horizontally and vertically with multiplication and addition (i.e., by changing its range, transposition, duration, and delay). These multiple versions of the same shape can be assembled algorithmically into a musical passage. We'll use the same shapes as control functions, and apply them in the same way as before, to the pitch and amplitude of a simple synthesized tone. This program shows one way to compose and synthesize a sequence of related sounds using variations of a single control function.

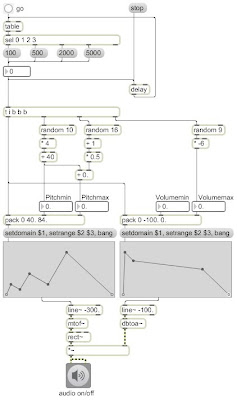

The shapes stay the same, but their transformations are chosen pseudo-randomly within specific ranges. The durations, which are also used as the delay time before starting the next shape, are chosen from among four possibilities: 100 ms, 500 ms, 2 sec, or 5 sec. The first duration will cause very short notes in which the envelope functions just have a timbral effect. The second duration is also a short note length (1/2 sec), but is just enough time for us to make out the shape within the note. The next two durations are definitely long enough for us to track the shape of the control functions as they affect pitch glissando and volume over the duration of a short "phrase".

The durations are chosen using a distribution of probabilities. An important thing to notice about probabilistic choices of durations is that long durations take up much more time than short durations. So if we used an equal distribution of probabilities, the long notes would take up way more of the total time than the short notes, and it would give the effect of the long notes predominating. The proportion of the given durations is 1:5:20:50, so if each duration were chosen with equal likelihood, the 2-second and 5-second notes would take up 70/76 (92.1%) of the total time. To counter that effect, we can use a distribution that is the inverse of the proportions of the note durations. (See inside the table object.)
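The arithmetic behind that choice can be sketched in Python. This is only an illustration under an assumption: the actual values stored in the table object are not shown here, so the weights below are simply taken to be the reciprocals of the durations. The helper `time_shares` is a hypothetical name for this example.

```python
import random

durations_ms = [100, 500, 2000, 5000]


def time_shares(durations, weights):
    """Expected fraction of total time occupied by each duration."""
    expected = [d * w for d, w in zip(durations, weights)]
    total = sum(expected)
    return [e / total for e in expected]


equal_weights = [1, 1, 1, 1]
inverse_weights = [1 / d for d in durations_ms]  # assumed: inverse of the durations

# With equal likelihood, the two long durations take about 92% of the time.
print(time_shares(durations_ms, equal_weights))

# With inverse weights, each duration occupies about one quarter of the time.
print(time_shares(durations_ms, inverse_weights))

# Choosing the next duration with the inverse weighting.
next_duration = random.choices(durations_ms, weights=inverse_weights, k=1)[0]
```

Under this assumption the relative likelihoods come out to 100:20:5:2, so short notes are chosen far more often, but each of the four durations accounts for roughly the same share of the total time.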

As in the earlier cited example, we use function objects to draw the function shapes. For each note we set the range and domain to the desired values, and then we bang the function objects to cause them to send their information to the line~ objects.

It's important to note that in this example, instead of the line segment functions controlling frequency and amplitude of the synthesized sound directly, they control the subjective parameters pitch and volume, specified as MIDI note numbers and decibels, respectively. Much has been written on the logarithmic nature of subjective perception relative to empirical measurement. This is particularly demonstrable in the case of musical pitch and loudness. We perceive these subjective traits as the logarithm of the empirical sonic attributes called fundamental frequency and amplitude. Fortunately, that logarithmic relationship makes it fairly easy to do a mathematical translation from a linear pitch difference or loudness difference into the appropriate exponential curve in the physical attribute of frequency or amplitude. The formula for translating MIDI pitch m into frequency f, according to the 12-notes-per-octave equal-tempered tuning system, is f=440(2^((m-69)/12)). Taking A=440 Hz, which is equivalent to MIDI note number 69, as the reference frequency, multiply it by 2 to the power of ((m-69)/12); that is, multiply (or divide) by the 12th root of 2 once for each semitone above (or below) A. Max provides objects called mtof (for individual Max messages) and mtof~ (for signals) that do this math for you. The formula for translating volume in decibels d into amplitude a is a=10^(d/20), which is to say 10 to the power of (d/20). This makes a reference of 0 dB equal to an amplitude of 1 (the greatest possible amplitude playable by Max signal processing), and all negative decibel values will result in an amplitude between 0 and 1. Max provides objects called dbtoa and dbtoa~ to do this math for you.
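For readers who want to check the numbers outside of Max, here is a minimal Python sketch of the two conversions. The function names are borrowed from the Max objects for convenience, but these are plain Python stand-ins, not the Max implementations. The sketch assumes the usual equal-tempered convention in which MIDI note 69 is 440 Hz and each 12 semitones upward doubles the frequency.

```python
def mtof(m: float) -> float:
    """MIDI pitch number to frequency in Hz (12-tone equal temperament, A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((m - 69.0) / 12.0)


def dbtoa(d: float) -> float:
    """Decibels to linear amplitude, with 0 dB equal to an amplitude of 1."""
    return 10.0 ** (d / 20.0)


print(mtof(69))     # the reference pitch A
print(mtof(81))     # one octave higher, so about twice the frequency
print(dbtoa(0))     # the reference level
print(dbtoa(-6))    # roughly half the amplitude
```

Because both formulas are exponential, a straight line segment in MIDI pitch or decibels becomes an exponential curve in frequency or amplitude.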

The arguments in the line~ objects might look a little strange, and they're not very important; they were chosen just to keep the program from making any sound when it's first opened. There's not really a MIDI pitch number that corresponds to a frequency of 0 (which would stop the rect~ oscillator)--MIDI pitch number 0 is a super-low C that would actually have a fundamental frequency of 8.1758 Hz--but -300 is a hypothetical MIDI number that would slow the oscillator down to about 0 Hz. Similarly, there is no decibel value that would truly give an amplitude of 0 (it would have to be negative infinity!), but -100 dB is equal to an amplitude of 0.00001, which is for all practical purposes inaudible.

Now let's look at the actual numbers being used for the pitches and volumes. The volume (peak amplitude) of each note will be one of nine possible decibel values, ranging from 0 dB (fff) to -48 dB (ppp) in increments of 6 dB. That's a pretty wide dynamic range for the peak amplitudes; it's at least as wide as most instrumentalists habitually use. Of course, recording systems need a much wider range than this to record all the subtleties of sounds in their quietest moments, but I'm just referring to the dynamic range of the peak amplitudes (the attacks) of the notes. The low end of the pitch range for each note will be one of 10 possible pitches, ranging from E just below the bass staff (MIDI 40) to E at the top of the treble staff (MIDI 76), in increments of a major third (4 semitones). The size of the range (the difference between Pitchmin and Pitchmax) will range from 0.5 to 8 semitones (from 1/4 tone to a minor 6th), as measured in 16 quarter-tone steps. This gives a possibility of some relatively wide glissandi and some very subtle small ones.
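The sets of possible values described above can be written out in a few lines of Python. This is a sketch rather than a transcription of the patch: the names `PEAK_DB`, `PITCH_MIN`, `GLISS_RANGE`, and `choose_note` are hypothetical, and the choices are assumed to be uniformly random.

```python
import random

PEAK_DB = [-6 * i for i in range(9)]           # 0, -6, ..., -48 dB (9 values)
PITCH_MIN = [40 + 4 * i for i in range(10)]    # MIDI 40, 44, ..., 76 (10 values)
GLISS_RANGE = [0.5 * i for i in range(1, 17)]  # 0.5, 1.0, ..., 8.0 semitones (16 values)


def choose_note(rng=random):
    """Pick the peak volume and pitch range for one note (uniform choice assumed)."""
    low = rng.choice(PITCH_MIN)
    span = rng.choice(GLISS_RANGE)
    return {
        "peak_db": rng.choice(PEAK_DB),
        "pitch_min": low,
        "pitch_max": low + span,
    }


print(choose_note())
```

The highest pitch this can produce is MIDI 84 (low end 76 plus an 8-semitone range), and the lowest is MIDI 40.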

To test this program, just start the audio and click on the go button. Notice that most of the notes are very short (100 ms or 500 ms), with occasional longer notes (2 sec or 5 sec). The dynamics are arbitrarily and equally distributed from ppp to fff, and the pitches are arbitrarily and equally distributed from low to high, with a variety of glissando ranges.

My contention is that even though the compositional decisions made by the computer are arbitrary and undistinguished in their own right, there is a sense of unity and consistency to the sounds being played, due to the constant use of single shapes for pitch glissando and amplitude envelope, and that this helps to give an impression of coherency and intentionality. That is presumably the main utility of motive in composition: it gives a sense of repetition, recognizable variation, and consistency to a variety of different sounds.